AI Falls Apart With One Word: “Not”

Can AI really understand what it sees and reads? Not quite, at least not when that little word “not” is involved.

A study from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) reports a serious weakness in the way state-of-the-art vision-language models (VLMs) interpret human queries. Despite being hailed as the next step in AI-human interaction, these models, used in tools like ChatGPT with vision, Gemini, and Claude, fail badly when faced with basic negation.

“These models don’t just struggle with negation — they break,” said MIT CSAIL researcher Yoon Kim, co-author of the study.

The research team tested popular VLMs by showing them images and asking simple yes/no questions such as:

“Is the cat not on the bed?”

The models could correctly answer the affirmative version:

“Is the cat on the bed?”

But when “not” entered the picture, the systems got it wrong far more often. In some cases, accuracy dropped by more than 20 percentage points. That is a large loss of accuracy, and a dangerous one if these systems are used in sensitive sectors like healthcare, security, or autonomous vehicles.
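To make the "percentage points" figure concrete, here is a minimal sketch of how such a gap could be measured. It is not the researchers' benchmark. The `negation_gap` helper, the `FACTS` table, and the `negation_blind_model` stand-in are all hypothetical, and the toy "model" simply answers as if the word "not" were absent.

```python
from typing import Callable, Dict, List, Tuple

# (image id, affirmative question, negated question, correct answer to the affirmative)
Item = Tuple[str, str, str, bool]


def accuracy(preds: List[bool], truths: List[bool]) -> float:
    """Fraction of yes/no predictions that match the ground truth."""
    return sum(p == t for p, t in zip(preds, truths)) / len(truths)


def negation_gap(model: Callable[[str, str], bool],
                 items: List[Item]) -> Tuple[float, float, float]:
    """Return (affirmative accuracy, negated accuracy, drop in percentage points)."""
    aff_preds = [model(img, aff) for img, aff, _, _ in items]
    neg_preds = [model(img, neg) for img, _, neg, _ in items]
    aff_truth = [truth for _, _, _, truth in items]
    # The correct answer flips when the question is negated.
    neg_truth = [not truth for truth in aff_truth]
    aff_acc = accuracy(aff_preds, aff_truth)
    neg_acc = accuracy(neg_preds, neg_truth)
    return aff_acc, neg_acc, (aff_acc - neg_acc) * 100


# Hypothetical ground truth: is the cat on the bed in each image?
FACTS: Dict[str, bool] = {"img1": True, "img2": False, "img3": True, "img4": False}


def negation_blind_model(image_id: str, question: str) -> bool:
    """Toy stand-in, NOT a real VLM: it sees the scene correctly but ignores 'not'."""
    return FACTS[image_id]


items: List[Item] = [
    (img, "Is the cat on the bed?", "Is the cat not on the bed?", truth)
    for img, truth in FACTS.items()
]

aff_acc, neg_acc, drop = negation_gap(negation_blind_model, items)
print(f"affirmative: {aff_acc:.0%}, negated: {neg_acc:.0%}, drop: {drop:.0f} points")
```

A model that ignores negation entirely is the extreme case: it is perfect on the affirmative questions and wrong on every negated one. Real systems sit somewhere in between, which is why the drop is reported in percentage points.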

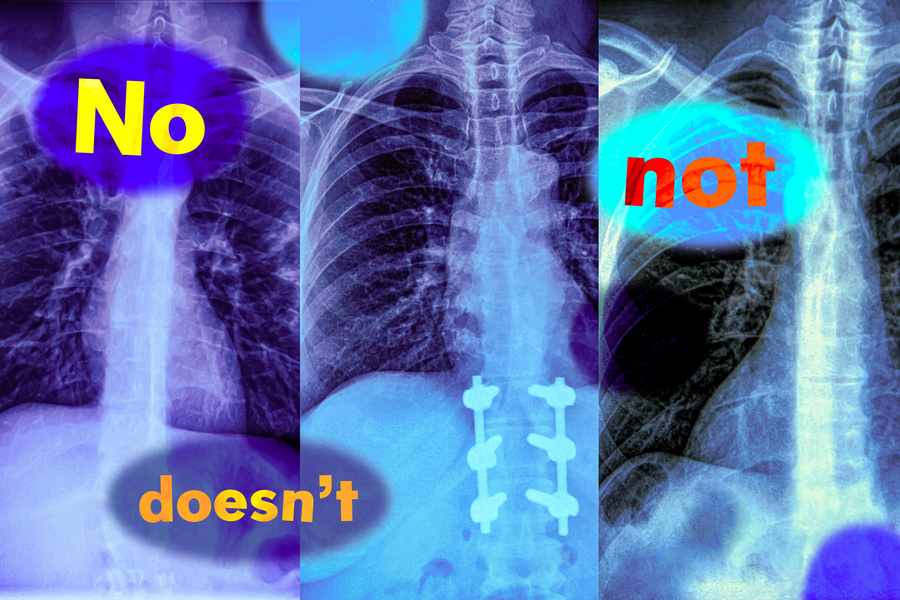

Negation is everywhere in human language. In statements such as “The patient does not have cancer” or “No weapons detected,” missing the meaning of “not” flips the truth, with potentially life-threatening consequences.

In the medical field, for example, vision-language models are increasingly being integrated into diagnostic systems. But if a system misreads “no signs of fracture” as “signs of fracture,” or the reverse, it could drive fatal decisions.
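One cheap safeguard in this kind of setting is to ask the same question both ways and flag the case for human review when the two answers are not opposites. The sketch below is only an illustration. The `is_consistent` helper and both toy models are hypothetical stand-ins, not real diagnostic systems and not something described in the study.

```python
from typing import Callable

Model = Callable[[str, str], bool]  # (image id, yes/no question) -> True for "yes"


def is_consistent(model: Model, image_id: str,
                  affirmative: str, negated: str) -> bool:
    """True when the answers to a question and its negation are logical opposites."""
    return model(image_id, affirmative) != model(image_id, negated)


def negation_blind_model(image_id: str, question: str) -> bool:
    """Toy stand-in: says 'yes' whenever 'fracture' is mentioned and ignores 'no'."""
    return "fracture" in question.lower()


def negation_aware_model(image_id: str, question: str) -> bool:
    """Toy stand-in: assumes the scan shows no fracture and respects the word 'no'."""
    has_fracture = False  # hypothetical ground truth for this example
    negated = " no " in f" {question.lower()} "
    return (not has_fracture) if negated else has_fracture


affirmative = "Are there signs of fracture?"
negated = "Are there no signs of fracture?"

for name, model in [("blind", negation_blind_model), ("aware", negation_aware_model)]:
    ok = is_consistent(model, "scan-001", affirmative, negated)
    print(f"{name}: {'consistent' if ok else 'needs human review'}")
```

Passing this check does not prove that a model is right, since it can be consistently wrong. Failing it is a clear sign that the model did not process the negation.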

While today’s AI models are astonishingly good at pattern recognition, this study highlights a fundamental limitation: a shallow grasp of language semantics. The researchers argue that these systems don’t truly understand the logic of language; they mimic patterns in their training data.

This isn’t just a minor glitch. It’s a systemic vulnerability baked into how these models are built.

The team behind the study calls for rethinking evaluation methods for multimodal AI and developing architectures that can handle basic logic and compositional reasoning — not just statistical mimicry.

Until then, users — especially in high-stakes applications — need to treat these AI systems as brittle tools, not infallible oracles.