ChatGPT-Style AI Could Run 60% Faster, Thanks to a Tiny AI Powerhouse

Artificial intelligence just got a serious speed upgrade. Researchers at KAIST have unveiled a new neural processing unit (NPU) core architecture that accelerates ChatGPT-style inference by 60%, all while reducing energy consumption. The impact? Generative AI could soon run smoother, faster, and more affordably than ever before.

This isn’t just about shaving milliseconds. Inference speed, meaning how quickly an AI model produces a response, determines how usable, scalable, and efficient these systems are in real-world applications. A 60% jump is more than an incremental boost. It’s a leap that could reshape the economics and user experience of AI deployment, especially on devices with limited power or compute capacity.
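To put the 60% figure in perspective, here is a rough back-of-the-envelope sketch. It assumes "60% faster" means 60% more throughput (the article does not say which way the number was measured), and the 2-second baseline is a made-up value for illustration, not a benchmark result.

```python
def latency_after_speedup(baseline_seconds: float, speedup_percent: float) -> float:
    """Response time after a throughput gain of `speedup_percent`.

    Assumption: "60% faster" means 1.6x the work per second,
    so the same response takes 1 / 1.6 of the original time.
    """
    return baseline_seconds / (1.0 + speedup_percent / 100.0)


baseline = 2.0  # hypothetical response time in seconds, not a measured figure
faster = latency_after_speedup(baseline, 60.0)
reduction = (1.0 - faster / baseline) * 100.0

print(f"{baseline:.2f} s -> {faster:.2f} s ({reduction:.1f}% less waiting)")
```

Under that reading, a 60% speedup cuts waiting time by a little over a third rather than by 60%. It is still a large gain, but the distinction is worth keeping in mind when comparing headline numbers.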

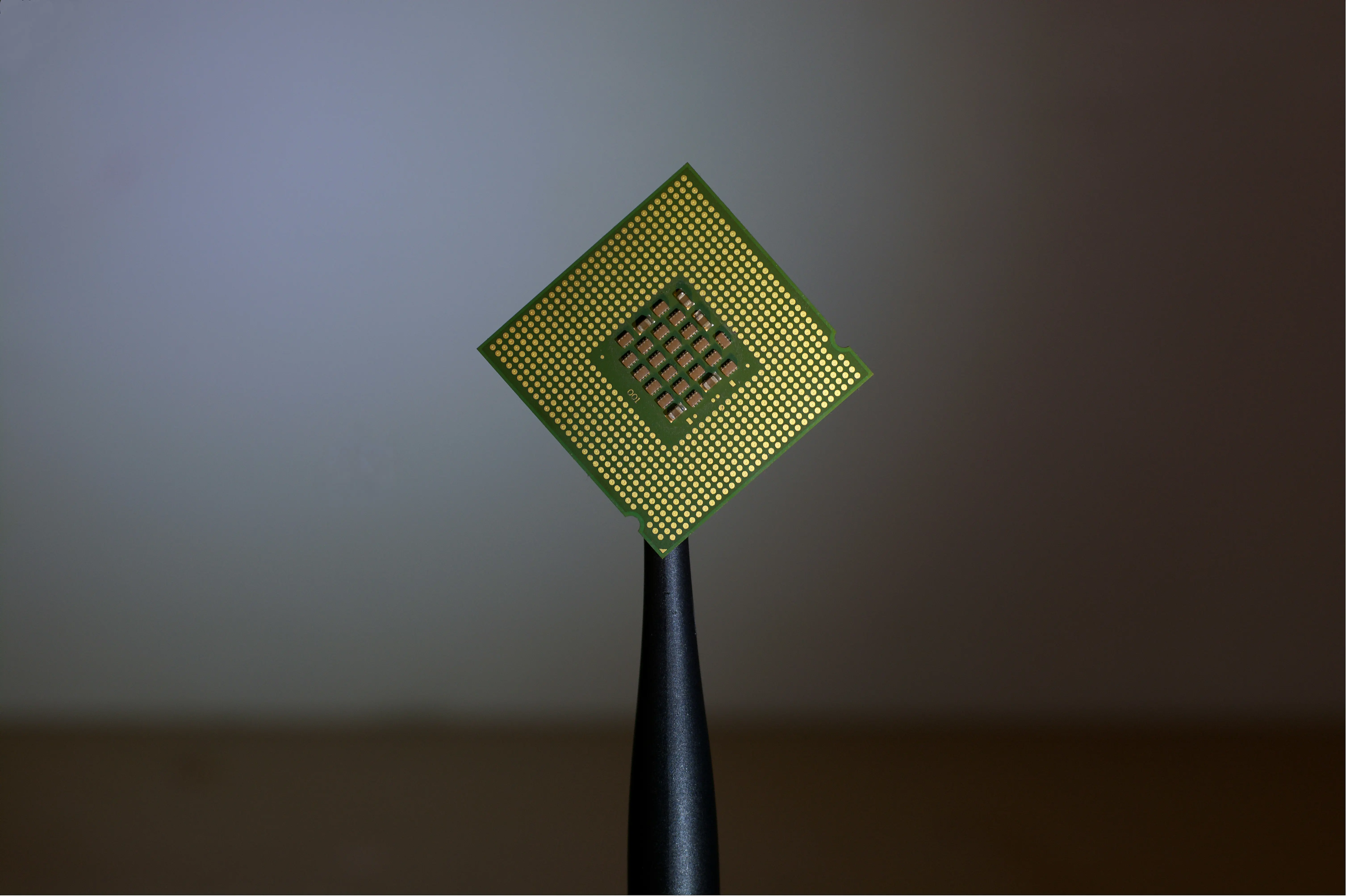

At the heart of the innovation is a flexible, high-throughput NPU core built for matrix-heavy transformer models, the same kind that powers ChatGPT, Gemini, Claude, and other large language models. Unlike traditional chips, this NPU can dynamically adapt to different layer sizes and computation patterns, minimizing bottlenecks during processing.
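Why does adapting to layer sizes matter? A toy model helps. The sketch below is not KAIST's design. It simply assumes a compute array that processes matrices in square tiles and pads any leftover space, then compares a fixed 128-wide tile against picking the best of a few tile sizes per layer. The tile sizes and layer shapes are hypothetical, chosen only to show the effect.

```python
import math


def utilization(rows: int, cols: int, tile: int) -> float:
    """Fraction of compute slots doing useful work when a rows x cols
    matrix is padded up to whole tile x tile blocks (toy model)."""
    padded_rows = math.ceil(rows / tile) * tile
    padded_cols = math.ceil(cols / tile) * tile
    return (rows * cols) / (padded_rows * padded_cols)


# Hypothetical shapes: a large square layer, a single-token
# (one-row) step, and an awkwardly sized small layer.
shapes = [(4096, 4096), (1, 4096), (100, 100)]

for rows, cols in shapes:
    fixed = utilization(rows, cols, tile=128)
    adaptive = max(utilization(rows, cols, tile=t) for t in (8, 32, 128))
    print(f"{rows:>4} x {cols:<4}  fixed: {fixed:6.1%}  adaptive: {adaptive:6.1%}")
```

In this simplified picture, the large square layer keeps a fixed array fully busy, while the one-row and odd-sized shapes leave most of it idle unless the hardware can work at a finer granularity. Real NPUs involve far more than tile padding, so treat this as intuition, not a performance model.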

This means AI could soon run not just in data centers, but on laptops, smartphones, and edge devices, bringing real-time generative capabilities to everyday hardware. Imagine smart assistants that don’t lag, AI copilots that respond instantly, or on-device translation that feels like a conversation, not a delay.

There’s also a green upside. The new chip design slashes energy use per inference, which is key as AI workloads become one of the tech industry’s fastest-growing energy sinks. More efficient inference could mean more sustainable AI at scale.

While the tech is still in the research stage, the potential is enormous. As models grow, so does the demand for hardware that can keep up without burning out. KAIST’s design suggests that clever architecture, not just raw compute, is a way forward.

In the race to make AI faster, lighter, and more accessible, this tiny chip might just change the game.